What is Sapien (SAPIEN)? AI Native Knowledge Graph on Web3

As AI systems become smarter yet increasingly centralized, a critical question arises: Who creates the data and how can we trust its quality?

That’s the fundamental challenge Sapien (SAPIEN) seeks to solve. Built as an AI Native Decentralized Knowledge Graph Protocol on Web3, Sapien aims to restructure the entire value chain of artificial intelligence by turning human knowledge into verifiable, tokenized data for machine learning systems.

What is Sapien (SAPIEN)?

Sapien (SAPIEN) is an AI native Decentralized Knowledge Graph Protocol built on the Web3 foundation. It transforms collective human knowledge into AI training data and provides it to enterprises. The project positions this as a novel approach to sourcing AI training data.

Moreover, the project aims to redefine how knowledge is created, verified, and owned in the age of artificial intelligence. Instead of allowing data and insights to remain locked inside centralized AI platforms, Sapien builds an open infrastructure where every piece of information, fact, or insight exists as a “Knowledge Node”, stored and verified on chain.

Through its Proof of Quality (PoQ) mechanism, a consensus model that validates accuracy and value, Sapien converts information into a programmable and shareable digital asset. Contributors earn transparent rewards in SAPIEN tokens, directly tied to the quality of their work.

In addition, Sapien offers a different path, transforming data contribution into a transparent, rewardable activity. Rather than letting “AI learn from you for free,” Sapien creates a decentralized data foundry, where anyone can contribute insights, context, or validation tasks and earn SAPIEN tokens in return.

Ultimately, Sapien democratizes the data pipelines that power AI, aligning incentives across contributors, validators, and organizations that rely on verified, high-integrity data. Its Knowledge Nodes form an open and interconnected knowledge network, where both humans and AI can query, expand, and collaborate seamlessly.

What is Sapien (SAPIEN)? – Source: Sapien

The Architecture of Decentralized Data Integrity

In an era where data fuels artificial intelligence, quality has become a matter of trust, not just technique. Most AI training pipelines still rely on centralized QA teams, manual reviewers, and static heuristics, systems that are opaque, costly, and unable to scale with complexity.

Sapien emerges as a structural rethinking of that paradigm, not by improving centralized QA, but by replacing it with a decentralized protocol of trust: the Proof of Quality (PoQ).

Proof of Quality (PoQ) – A New Constitution for AI Data Integrity

Proof of Quality (PoQ) is the foundation of the Sapien ecosystem. It replaces centralized quality assurance with a distributed, transparent system where quality becomes a measurable on-chain signal, backed by financial collateral and clear accountability.

PoQ operates through four core pillars, creating a self-regulating trust economy.

- Staking (Financial Commitment): Contributors must stake SAPIEN before performing any task. The size and duration of the stake directly determine access to higher tier assignments and reward multipliers. Low quality work results in partial or full slashing of the stake.

- Validation (Peer Review): Completed work undergoes review by peers with higher reputation scores. Accurate validators earn rewards, while incorrect approvals receive penalties. In addition, critical tasks may require multi layer validation to ensure fairness and objectivity.

- Reputation (On chain Merit Record): Each participant builds a public reputation profile based on accuracy, task volume, and peer feedback. This record cannot be purchased; it must be earned through consistent, high quality performance over time.

- Incentives (Rewards & Accountability): Contributors earn SAPIEN and USDC rewards proportional to task complexity, peer relative performance, and staking duration. Consequently, high performers gain greater access, while underperformers face slashing, reduced privileges, and slower progression.

Ultimately, PoQ transforms trust into a quantifiable economic signal. Every action has a cost, every quality output generates yield, and every error leaves a trace of financial accountability.
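
To make the interplay of staking, validation, reputation, and incentives concrete, here is a minimal sketch of how a single task might be settled. The article does not publish the actual formulas, so the approval threshold, slashing fraction, reputation steps, and the `settle_task` function itself are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contributor:
    name: str
    stake: float        # SAPIEN locked as collateral
    reputation: float   # hypothetical score in [0, 1]


def settle_task(contributor, approvals, base_reward,
                pass_threshold=0.5, slash_fraction=0.25):
    """Settle one task from peer votes (True = approve).

    Every number and formula here is an illustrative assumption,
    not Sapien's published rule set.
    """
    if not approvals:
        raise ValueError("at least one validator vote is required")
    approval_rate = sum(approvals) / len(approvals)
    if approval_rate > pass_threshold:
        # Accepted work: pay a reward and nudge reputation up.
        reward = base_reward * approval_rate
        contributor.reputation = min(1.0, contributor.reputation + 0.05)
        return reward
    # Rejected work: slash part of the stake and lower reputation.
    penalty = contributor.stake * slash_fraction
    contributor.stake -= penalty
    contributor.reputation = max(0.0, contributor.reputation - 0.10)
    return -penalty
```

In this sketch, a contributor with 100 SAPIEN staked whose work is rejected by most reviewers loses 25 SAPIEN and some reputation, while accepted work pays out in proportion to the approval rate.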

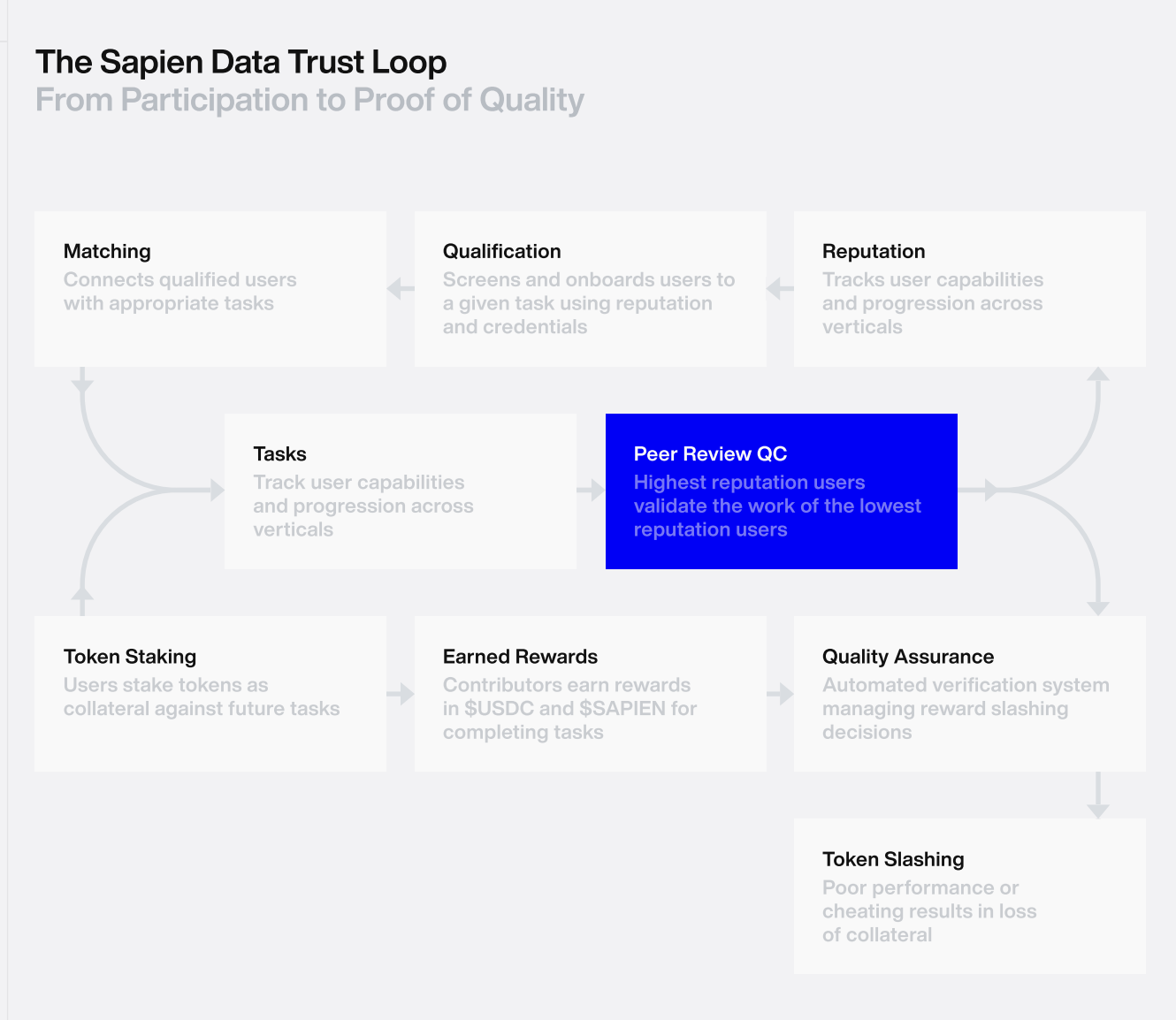

The Sapien Data Trust Loop – Operationalizing Quality on-chain

If PoQ defines the constitution of trust, the Sapien Data Trust Loop is the machine that enforces it, a self reinforcing cycle where every contribution, validation, and reward is coordinated through transparent, on chain logic.

The loop translates the principles of PoQ into an operational reality: a continuous workflow that turns participation into proof, proof into reward, and reward into reputation. Each iteration strengthens the network’s overall integrity.

The Sapien Data Trust Loop – Operationalizing Quality on-chain – Source: Sapien

The Sapien Data Trust Loop consists of eight dynamic stages:

- Matching: Connects qualified users with relevant tasks using on chain reputation and verified credentials.

- Qualification: Screens and onboards contributors, validating their domain expertise before access.

- Token Staking: Participants lock SAPIEN as collateral against the quality of their upcoming work.

- Tasks: Contributors execute assignments: labeling, reasoning validation, RAG source vetting, or higher-order cognitive evaluations.

- Peer Review QC: High reputation contributors validate the work of lower reputation peers, creating a layered quality structure.

- Quality Assurance & Slashing: The protocol aggregates validation outcomes to determine rewards or penalties; poor performance triggers slashing.

- Earned Rewards: Successful contributors receive USDC and SAPIEN proportional to complexity and accuracy.

- Reputation Update: Performance data is recorded on-chain, directly influencing the contributor’s eligibility and matching in the next cycle.

This closed loop system turns data production into a verifiable economic process, ensuring that each piece of information carries a traceable record of effort, validation, and accountability.
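
The eight stages can be pictured as a simple cyclic state machine. The sketch below only models the ordering described above; the `Stage` enum and helper names are hypothetical, and a real implementation would presumably branch at the slashing stage rather than always moving on to rewards.

```python
from enum import Enum


class Stage(Enum):
    MATCHING = 1
    QUALIFICATION = 2
    TOKEN_STAKING = 3
    TASKS = 4
    PEER_REVIEW_QC = 5
    QA_AND_SLASHING = 6
    EARNED_REWARDS = 7
    REPUTATION_UPDATE = 8


def next_stage(stage):
    """Return the stage that follows; the last stage wraps to the first."""
    members = list(Stage)
    return members[(members.index(stage) + 1) % len(members)]


def run_cycle(start=Stage.MATCHING):
    """List the stages visited in one full pass of the loop."""
    visited = [start]
    current = next_stage(start)
    while current is not start:
        visited.append(current)
        current = next_stage(current)
    return visited
```

Because `REPUTATION_UPDATE` wraps back to `MATCHING`, the updated reputation from one pass feeds the matching step of the next, which is the "closed loop" property the article emphasizes.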

Where Quality Becomes an Economic Structure

The Synergy Between PoQ and the Data Trust Loop

What truly distinguishes Sapien is not its individual mechanisms but the synergy between Proof of Quality (PoQ) and the Data Trust Loop. Together, they create a self correcting ecosystem where behavioral economics, consensus design, and AI data operations intersect seamlessly.

Moreover, PoQ serves as the philosophy of enforcement, defining what verifiable quality means. In contrast, the Data Trust Loop acts as the execution engine, applying that philosophy across millions of contributors.

Each cycle of the loop produces a “proof-backed data unit”, a verified piece of knowledge directly linked to its creator, validation history, and financial guarantee.

Data as a New Economic Asset

Furthermore, the implications go far beyond data accuracy. Sapien transforms data into a new class of economic asset. Enterprises accessing Sapien’s datasets through API or marketplace no longer purchase raw data. Instead, they acquire datasets with cryptographic provenance, complete with authorship, validator identity, and quality guarantees secured on chain.

From an economic standpoint, Sapien introduces a novel form of Proof of Work where the “work” represents knowledge mining rather than block mining. The reward system extends beyond tokens to include reputation, access, and verifiable social capital.

A Paradigm Shift in AI Data Infrastructure

Consequently, the fusion of PoQ and the Data Trust Loop marks a turning point in AI data infrastructure.

- Quality assurance evolves from a centralized department into a permissionless protocol governed by incentives and penalties.

- Data workers shift roles, moving from anonymous contractors to stakeholders in a verified knowledge economy.

- Every dataset becomes a responsible digital asset, traceable, auditable, and economically accountable.

The Foundation for a Decentralized Data Future

Ultimately, as the world demands larger and more ethically grounded AI datasets, Sapien’s model lays the groundwork for a Decentralized Data Infrastructure (DDI). In this future, trust is not outsourced to institutions but encoded directly into transparent, auditable protocol logic.

The Commercial Layer of “Passported Data” in the Sapien Ecosystem

Once Proof of Quality (PoQ) and the Data Trust Loop transform quality into a verifiable on-chain signal, the next question naturally arises: How does that trust translate into real economic value?

The answer lies in two layers: Sapien Marketplace, where data becomes a verifiable, tradeable asset, and Sapien Solutions, where enterprises and AI systems can directly deploy verified data for complex, real world use cases such as robotics, autonomous systems, and governance AI.

Sapien Marketplace

The Sapien Marketplace represents the commercialization layer of the ecosystem where data transitions from an internal pipeline to a transparent, auditable, open marketplace.

Unlike traditional data labeling or crowdsourcing platforms that keep datasets behind corporate walls, Sapien’s marketplace brings the entire value chain on chain, exposing provenance, validation, and economic accountability for every contributor and dataset.

The Economy of Verified Knowledge

Each dataset or task listed on the marketplace carries three verifiable properties:

- Provenance: Every data point comes with an immutable record of its creator, validator, and staking commitment.

- Validation: Peer review and PoQ verification records are visible on chain.

- Accountability: Contributors and validators are financially responsible for data accuracy. Mislabeling or malicious work leads to slashing.

Enterprises, research institutions, and AI labs can access these verified datasets directly via API or managed dashboards. What they acquire is not just raw data, but “passported data”, data that carries its own on chain credentials: who created it, who verified it, and how its quality was financially guaranteed.
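
As a rough illustration of what "passported data" could look like to a consumer, the sketch below bundles a data point with its provenance fields and derives a tamper-evident fingerprint. The `PassportedRecord` type, its field names, and the choice of SHA-256 over canonical JSON are assumptions for illustration; the article does not describe Sapien's actual on-chain schema.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class PassportedRecord:
    payload: str          # the data point itself (e.g. a label)
    creator: str          # contributor identifier (provenance)
    validators: tuple     # identifiers of approving reviewers (validation)
    stake_amount: float   # SAPIEN committed behind the work (accountability)

    def fingerprint(self):
        """SHA-256 hex digest over a canonical JSON form of the record."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Changing any field, such as swapping one validator for another, produces a different fingerprint, so a buyer who stores the digest can later detect whether a record's credentials were altered.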

Expert Reasoning

One of Sapien’s most distinctive features is the Expert Reasoning Layer, a specialized domain within the marketplace. It focuses on high-context cognitive tasks, the kind of reasoning and judgment that current AI models still struggle to perform autonomously.

Moreover, this layer connects human expertise with machine intelligence, allowing experts to evaluate, refine, and guide AI decision-making in real time. As a result, Sapien builds a bridge between human intuition and algorithmic logic, ensuring that complex reasoning remains accurate, explainable, and ethically grounded.

The tasks in this layer include:

- Chain of thought reasoning evaluation

- RAG (Retrieval Augmented Generation) source validation

- Alignment and subjectivity scoring

- Multi turn dialogue validation

Only contributors with the highest on chain reputation (Experts and Masters) gain access to these assignments, earning rewards in SAPIEN and USDC based on accuracy, complexity, and peer validation performance.

This creates an emerging knowledge economy, where humans and AI co produce and refine data together. Each validation loop not only yields new datasets but also enhances the collective intelligence of the network itself, turning Sapien into the world’s first tokenized, decentralized AI training infrastructure at scale.

Sapien Solutions

If the marketplace is where data is traded, Sapien Solutions is where data is applied. It connects the PoQ verified datasets with real world industries that require accuracy, contextual nuance, and accountability at the data layer.

Key verticals include autonomous systems, robotics & computer vision, and AI reasoning & governance.

Autonomous systems

3D bounding box annotation, LIDAR segmentation, and frame to frame object linking: These datasets are foundational for self driving cars, industrial robotics, and spatial intelligence. With PoQ verification, each data point is peer reviewed and staked, ensuring the reliability required for real world machine perception.

Robotics & computer vision

Mesh repair, texture labeling, occlusion tagging: In robotics, even minor labeling errors can lead to catastrophic system failure. PoQ ensures validators and contributors have skin in the game, so every label is economically guaranteed for precision.

AI reasoning & governance

Tasks in toxicity scoring, bias detection, and regulatory alignment: These build the backbone for ethical, transparent, and auditable AI systems, where value and accountability coexist. All Solution layer processes are powered by the same PoQ mechanism, meaning whether it’s 3D spatial mapping or reasoning evaluation, every piece of data is tied to stake, peer validation, and on chain reputation.

Turning Verified Trust into Real World Value

The launch of Sapien Marketplace and Sapien Solutions converts PoQ from a protocol into a living economic system:

- PoQ + Data Trust Loop: Create verified, “passported” data.

- Sapien Marketplace: Commercialize that verified data, turning trust into liquidity.

- Sapien Solutions: Deploy verified data into real world industries, cycling value back into the network.

Together, they form a closed feedback loop of trust, data, value, and application where AI learns from humans, humans are rewarded by the protocol, and both improve through each iteration.

Sapien Marketplace and Sapien Solutions are the logical extensions of Proof of Quality where verified trust turns into tangible economic output. From staking to datasets, from peer validation to autonomous systems, every layer of Sapien operates under one philosophy: Quality is an asset, trust is capital, and data is the new market of Web3.

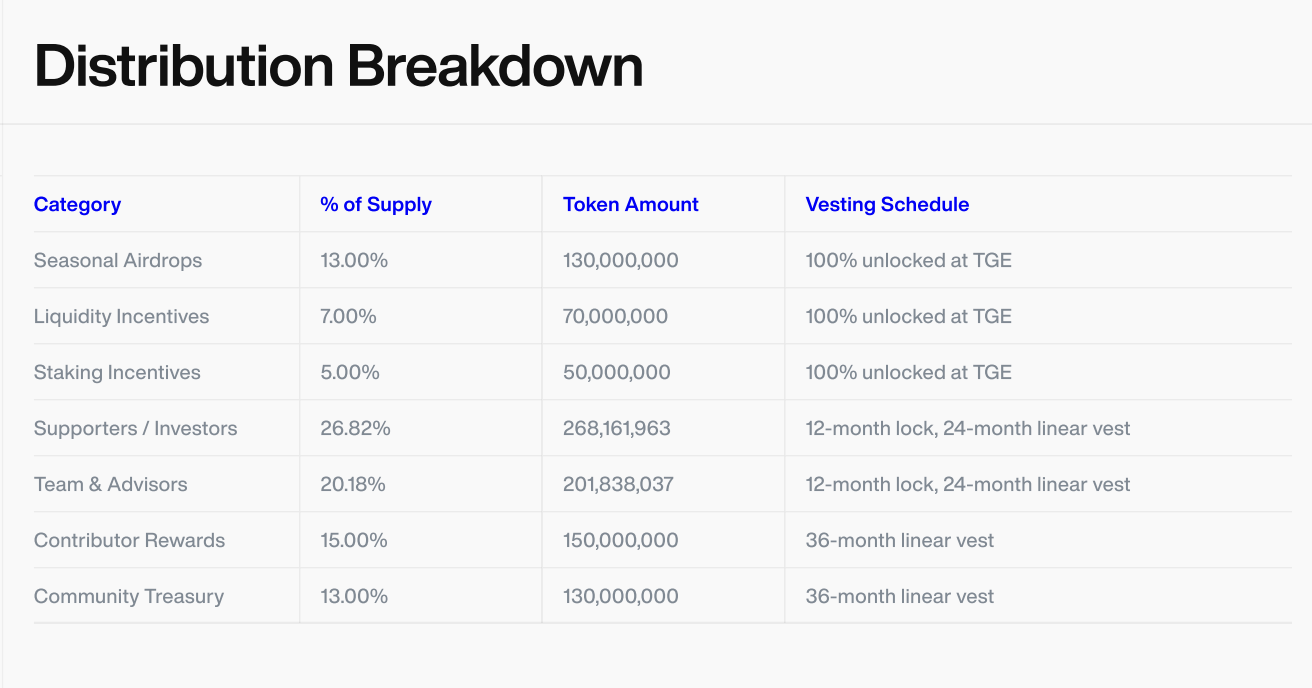

Tokenomics

The SAPIEN token is designed as a self balancing financial structure that sustains three critical objectives: early participation incentives, long-term economic sustainability, and transparent value distribution.

Unlike many project tokens used only for transactions or governance, SAPIEN embodies a mechanism of trust, anchoring Sapien’s entire architecture in economic accountability. In Sapien, knowledge creation isn’t free: contributors must have financial skin in the game, and rewards are distributed proportionally to verifiable on chain quality.

Sapien’s total supply is 1,000,000,000 SAPIEN, a fixed amount with no inflationary minting, ensuring long-term transparency and scarcity.

Token Allocation

- Seasonal Airdrops: 13%

- Liquidity Incentives: 7%

- Staking Incentives: 5%

- Supporters and Investors: 26.82%

- Team & Advisors: 20.18%

- Contributor Rewards: 15%

- Community Treasury: 13%
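
For readers who want the allocation in token terms, the short sketch below converts the percentages above into SAPIEN amounts and checks that they sum to the full supply. The figures come from the list above; the variable and function names are just illustrative.

```python
from decimal import Decimal

TOTAL_SUPPLY = 1_000_000_000  # fixed SAPIEN supply stated in the article

ALLOCATION_PERCENT = {
    "Seasonal Airdrops": Decimal("13"),
    "Liquidity Incentives": Decimal("7"),
    "Staking Incentives": Decimal("5"),
    "Supporters and Investors": Decimal("26.82"),
    "Team & Advisors": Decimal("20.18"),
    "Contributor Rewards": Decimal("15"),
    "Community Treasury": Decimal("13"),
}


def allocation_tokens(percentages, total_supply):
    """Convert percentage shares into whole-token amounts."""
    return {
        name: int(pct * total_supply / 100)
        for name, pct in percentages.items()
    }


tokens = allocation_tokens(ALLOCATION_PERCENT, TOTAL_SUPPLY)
for name, amount in tokens.items():
    print(f"{name}: {amount:,} SAPIEN")
```

`Decimal` is used instead of floats so that shares like 26.82% convert to exact token counts without rounding drift.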

How to Buy SAPIEN

Start by identifying verified listings of SAPIEN on reputable platforms such as Binance, KuCoin, or Uniswap (for Ethereum based liquidity pools). Always double check the official contract address via Sapien’s website or documentation before trading.

- Buy SAPIEN: Search for trading pairs such as SAPIEN/USDT, SAPIEN/USDC, or SAPIEN/ETH. Enter your desired purchase amount, review the transaction details, and confirm your trade.

- Participate in the Ecosystem: Stake your SAPIEN on the official Proof of Quality (PoQ) dashboard to access contributor or validator roles. By doing so, you transform token ownership into active participation in the decentralized knowledge economy where your contribution directly shapes the evolution of Sapien’s data infrastructure.

FAQ

What is Sapien?

Sapien is an AI native decentralized knowledge protocol that transforms collective human intelligence into verified training data for artificial intelligence. Built on Web3, it allows anyone to contribute knowledge, validate data, and earn rewards based on accuracy, creating a transparent infrastructure where data quality, trust, and ownership are enforced by the blockchain.

What makes SAPIEN unique among AI tokens?

Most AI tokens focus on computing resources or model access. SAPIEN, by contrast, tokenizes data quality, the fundamental layer that powers all AI systems. It is the first protocol to unite staking, validation, and on chain reputation into a single verifiable model of knowledge creation.

Is Sapien just a data labeling platform?

Not exactly. Sapien is a full stack decentralized knowledge infrastructure, encompassing not only labeling tasks but also reasoning evaluations, compliance checks, 3D/4D data processing, and AI governance workflows. It’s not merely a platform for annotation. It’s a living framework for human AI collaboration.

How does Sapien ensure data quality?

Sapien enforces data quality through its Proof of Quality (PoQ) mechanism. By integrating staking, peer validation, and on chain reputation, PoQ turns quality into an economic signal. Every contributor has financial accountability, and every dataset carries cryptographic proof of accuracy, replacing subjective review with objective, on chain verification.

What’s the difference between Sapien Marketplace and Sapien Solutions?

The Marketplace is where verified, on-chain datasets are listed, priced, and monetized. Solutions represent the application layer where verified data is deployed in real world industries such as robotics, autonomous systems, healthcare, and AI governance.

Together, they form a closed value loop of trust → data → value → application.

How can I earn SAPIEN?

You can earn SAPIEN by contributing or validating datasets, completing reasoning tasks, or participating in DAO governance. Each task is governed by PoQ, so your earnings increase with accuracy, consistency, and reputation level.

What role does AI play in Sapien?

AI Agents within Sapien’s Agent Layer interact with human contributors, performing validation, reasoning, and contextual analysis. They co-train alongside humans, continuously improving both human and machine performance. This symbiosis forms the foundation of decentralized intelligence, a network where humans and AI grow together through verified knowledge.

Source: NFT Evening